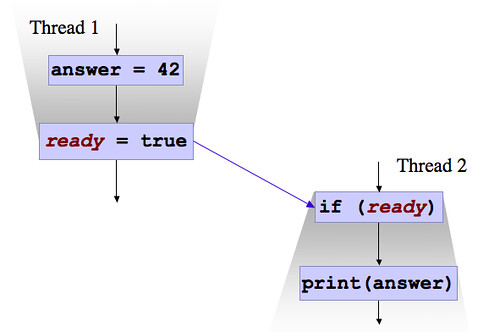

As a reminder, basically, a data race is when you have one (or more) writes, and potentially some reads; they are all to the same memory location; they can happen at the same time; and that there is nothing in the program to prevent it. This is different from a race condition, which is when you just don't know the order in which two actions are going to occur. I've put more discussion of what a data race actually is at the bottom of this post.

A lot of people think that it is okay to have a data races all over your code. By and large, it is a lot more dangerous than those people think it is. For example, the version of double checked locking that doesn't use volatile has a data race in it. As I've discussed before, that version is broken.

So, having said that, when can you have a harmless data race in your code? Here's some code from Sun's implementation of

java.lang.String, which is available under the GPL:

public final class String {

/** The value is used for character storage. */

private final char value[];

/** The offset is the first index of the storage that is used. */

private final int offset;

/** The count is the number of characters in the String. */

private final int count;

/** Cache the hash code for the string */

private int hash; // Default to 0

public int hashCode() {

int h = hash;

if (h == 0) {

int off = offset;

char val[] = value;

int len = count;

for (int i = 0; i < len; i++) {

h = 31*h + val[off++];

}

hash = h;

}

return h;

}

// ...

}

hashCode(). If it hasn't calculated the hash code yet, then it does, and then stores the value for future use. The people who wrote String didn't bother to calculate the hash code in the constructor, because that would make construction (which is done all the time) more expensive. Because Strings are immutable, we don't need to worry about the hash code potentially changing in the future.Do you see the potential data race? Here's some code that triggers it:

class BenignDataRace {

final String s = "blah";

public void main() {

class CalcHash implements Runnable {

public void run() {

int h = s.hashCode();

}

}

Thread t = new Thread(new CalcHash());

Thread t2 = new Thread(new CalcHash());

t.start();

t2.start();

}

}

s.hash without doing synchronization, and at least one of those threads is going to write to it. Item 71 in Effective JavaThere are basically two parts to the answer, and they are both pretty simple:

- In this case, we don't care how many times we actually calculate the answer and assign it to the

hashfield. It just isn't a big deal if we happen to calculate it multiple times. Furthermore, - Java has a special guarantee for fields that are 32-bit or fewer and object references — a thread will always see some value that was assigned to that field. As a result of this, a thread reading

hashwill either see 0 or the calculated value. Edited to add: I just noticed that this was misleading. An object reference will point to the right object, but the contents of the object aren't guaranteed to be correct unless the object is immutable. Please see my series on immutability.

hash were a long or a double.Now, let's break the code:

public int hashCode() {

if (hash == 0) {

int off = offset;

char val[] = value;

int len = count;

int h = 0;

for (int i = 0; i < len; i++) {

h = 31*h + val[off++];

}

hash = h;

}

return hash;

}

What I've done here is to add an additional read: the second read of hash, before the return. As odd as it sounds, and as unlikely as it is to happen, the first read can return the correctly computed hash value, and the second read can return 0! This is allowed under the memory model because the model allows extensive reordering of operations. The second read can actually be moved, in your code, so that your processor does it before the first!

(Side note: the first version is better anyway, because it performs fewer memory reads. If you really care that much about performance, that's the way to go.)

What's the moral here? The moral is that this stuff is very confusing, and you have to work very hard to get it right. Make sure you understand exactly what your code does. Avoid data races wherever possible.

Here are the details on data races that I promised above:

A program has a data race in it when it is possible to have an execution of that program where:

- All of the instructions in that program occur in the order they occurred in the program (this is important for technical reasons),

- There are two accesses to the same field / array element,

- At least one of those accesses is a write, and

- There is no happens-before ordering between those actions.

I defined "happens-before ordering" in my last post. Basically, it means that there is nothing establishing an order between the two actions; for example, there is no locking protecting the accesses, no volatile writes and reads intervening and no thread starts and joins.